17 Aug 2012

The Bun Reunion “AfterMath”

After welcoming people to the 40th Reunion of the ‘Bun and other 1970s computing at the University of Waterloo in mid-August 2012, I’ve gathered together a photo album, the brief presentation from the Gala and the many comments received outside of the earlier blog post.

Before the Gala, almost 100 photos had been gathered; the collection has since grown to almost 250, contributed by various attendees. Enjoy browsing the memories.

- Dave Conroy

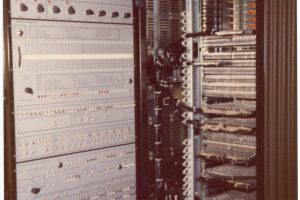

- System Controller

- Card Reader

- Line Printer

- Removable Disc Drive

- Randall Howard

- Dave Buckingham

- Charles Forsyth @ Math/Unix

- Eric Manning

- Dave Martindale

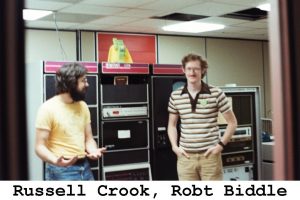

- Robert Biddle

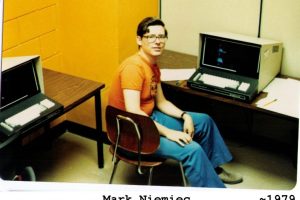

- Mark Niemiec

- Jim Gardner

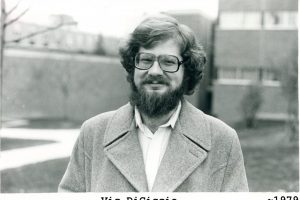

- Vic DiCiccio

- Dave Martindale

- Peter & Sylvia Raynham

- Wendy Nabert Williams

- Rohan Jayasekera

- Hide Tokuda & San-Qui Li

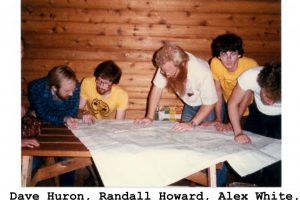

- Dave + Randall @ Mark Williams

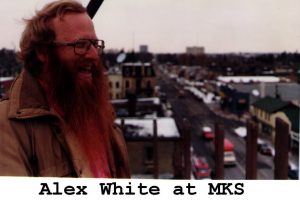

- Alex White

- Randall @ MKS

- The Hacks @ Randall&Judy Wedding

- Math Building

- Morven Gentleman

- Morven Gentleman

- Eric Manning

- Eric Manning

- Ciaran O’Donnell

- Michael Dillon

- Peter & Flaurie Stevens

- Rick Beach

- Rick Beach

- Brad Templeton

- Brad Templeton

- Brad Templeton

- Ian Chaprin

- Ron & Amy Hansen

- Jon Livesey

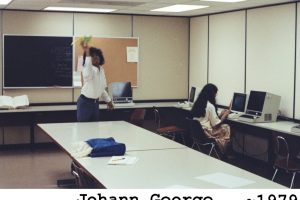

- Johan George

- Kelly Booth

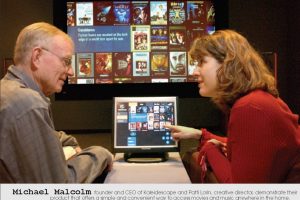

- Mike Malcolm

- Ian! Allen

- Linda Carson

- Linda Carson

- Dan Dodge

- Dave Huron

- R Anne Smith

- Trevor J Thompson

- Math Building

I’ve also included the brief presentation from the Gala on Saturday 18 August, 2012 in case anyone wants to see that:

Finally, there was a lively discussion via email, Facebook, Google+, LinkedIn and Twitter both from attendees and those who were unable to join us. The following is a summary of some of those reflections and comments:

Randall,

The first story that comes to mind is how we got the Bun in the first place.

In 1971, Eric Manning and I, as young faculty members, felt that it was embarrassing that a university which wanted to pride itself on Computer Science did not have any time-sharing capability, as all the major Computer Science schools did.

At the time, the Faculty of Mathematics was paying roughly $29,000/month to IBM for an IBM 360/50, which was hardly used at all – it apparently had originally been intended for process control, but that never happened. (Perhaps the 360/50 had been obtained at the same time as the 360/75 – I never knew.) So Eric and I approached the dean with a proposal to see if those funds could be diverted to be spent instead on obtaining time-sharing service. The dean approved us proceeding to investigate the options.

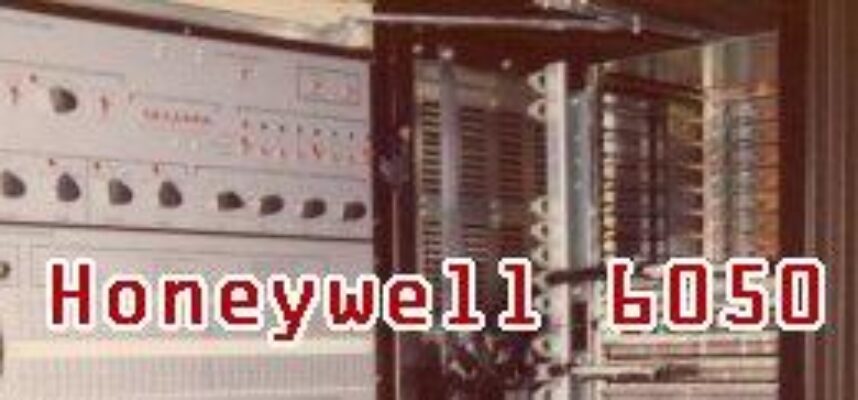

The popular time-sharing machine of the day in universities was the DEC PDP-10, so we wired a spec to get one, but issued the RFP to all vendors. In the end, we received bids from IBM, Control Data, Univac, DEC, and Honeywell. IBM bid a 360/67 running TSS 360 at more than twice what we were paying for the 360/50, and at the time only the University of Michigan’s MTS software actually worked at all on the machine: the bid was easily dismissed. Control Data bid a CDC 6400 at above our budget, but at the time didn’t have working time-sharing software: again easily dismissed. Univac bid an 1106, again above our budget, and although its OS, Exec 8, had some nice aspects as a batch system, we had no awareness of time-sharing on it: so we dismissed it too. DEC bid a KA 10 almost exactly at our budget: this was what we originally wanted, so it made the short list. Honeywell bid the 6050 for $24,000/month, a notable saving for the Faculty, and since I had used GCOS III at Bell Labs, I knew that even if not ideal, it would be acceptable: again on the short list.

Announcing the short list had a dramatic effect. DEC was so sure that they would win that they revealed that, as was their common practice in that day, they had low-balled the bid, and a viable system was actually going to cost $32,000/month.

Honeywell instead sweetened their bid – more for the same money, and the opportunity for direct involvement with Honeywell’s engineering group in Phoenix. Whereas with DEC we would be perhaps the thousandth university in line, and unlikely to have any special relationship, Honeywell only had three other university customers: MIT, who were engrossed with Multics; Dartmouth, who had built their own DTSS system; and the University of Kansas, who had no aspirations in software development – we would be their GCOS partner.

The consequence was there was no contest. The Faculty cancelled the 360/50 contract and accepted Honeywell’s bid. I agreed to take on the additional responsibility for running the new time-sharing system. The machine had already been warehoused in Toronto, so it was installed as soon as the machine room on the third floor could be prepared.

Morven (aka wmgentleman)

Hi Randall

Yes, all’s well here. I was mandatorily retired from UVic but continue to work on various projects for the Engineering Faculty, and a bit of consulting etc. Engineering has no end of interesting things to work on.

I’m very sorry that I can’t attend your Unix/Bun/CCNG celebration; the mark we made certainly should be celebrated!

I’m distressed about the crash & burn of Nortel and now RIM, and I certainly wish you well in keeping the tech sector alive and well.

Rocks, logs and banks alone do not a healthy economy make.

All the best

Eric

Randall,

Unfortunately I have to be in Seattle at that time. It does sound like a good time will be had. I would especially like to go to the Heidelberg again (which I did have the occasion to do in 2001 [or so]).

After Waterloo, I did time at BTL (in Denver, working on real-time systems) then wound up at Sun in charge of the Operating Systems and Networking group — putting me in charge of what was arguably the best set of UNIX people ever assembled. Had a number of other adventures after Sun, and finally decided to retire when the people I was hiring were more interested in how much money they could make than in what they would be doing. Guess I was spoiled by the Sun people I managed.

I have a “blog” updated quarterly for friends and family: http://bclodge.com/index.htm

Do look us up if you are ever in this area (Bozeman, MT). Some memories:

One day some malicious (and uncreative) person copied down a script that was known to crash UNIX by making it essentially unusable. It went something like:

while true

do

mkdir crash

cd crash

done

Some subset of the hacks (I forget which) spent a great deal of time trying to figure out how to undo the damage. The obvious things did not work. They finally decided to go to dinner and think about it. I stayed and thought of a way to fix the problem; I finished the fix just as they returned. They wanted to know how I did it. I never told them and am still holding the secret (it was a truly disgusting hack).

Anyhow, the hacks I most remember (other than yourself) were Ciaran O’Donnell (on LinkedIn), Dave Conroy, the underage Indian kid whose name escapes me at the moment, and one more Dave (Martindale?).

Some more stories:

Someone from Bell Labs came to give a talk about text to voice and gave a demo by logging in via phone modem to the Bell Labs computers. The hacks looked at the phone records and figured out how to log in to the BTL system. Suddenly our Math/UNIX system was getting all the latest new UNIX features before they were released (by means unbeknownst to me). The BTL people weren’t terribly happy when they found out, but they were happy to accept a guarantee it would stop.

We kept trying to use the IBM system to do printing with a connection to one of their channels (I think they were called). It would frequently stop working and someone would have to call the operator and say “restart channel 5” (or some other jargon). I had a meeting with the IBM staff to see if we could get the problem fixed. At that meeting I recall one of the staff was incredulous that our system did not reboot when they rebooted the IBM mainframe. Anyhow, they were reluctant to fix the problem so I told them I would fix it by buying a voice synthesizer (as demonstrated by BTL) and have our system call their operator to instruct them to “restart channel 5”. They fixed the problem.

The worst security problem I recall someone finding in the ‘Bun’ was to do an execute doubleword where the second word was another execute doubleword. Execute doubleword disabled interrupts; this was a way of executing indivisible sequences of instructions. By chaining this way for exactly the right amount of time (1/60 second I think) and doing a system call as the last instruction, there would be a fault in the OS for disabling interrupts too long and the system would crash. I don’t know if anyone ever figured out a fix since this was essentially hardwired into the machine.

I assume there will be pictures, etc from the event….

Gary (aka grsager)

Richard Sexton: I still used the ‘bun for a few months when I moved to LA in 79 (x.25 ftw). I’d love to be there but can’t make it that day but I promise when there’s a similar event for math unix I will be there; that was the first (and I sometimes think only) decent computer I ever used.

When I worked with Dave Conroy summers at Teklogix, we worked for Ted Thorpe who was the Digital sales guy running around selling the same machine to six different universities just so they could sign for it at the shipping dock and get it on *this* year’s budget. Ted would then take the machine to the next school until Digital had actually made enough that they could ship all the ones that had actually been ordered.

Stefan Vorkoetter: Wow! I remember using that machine in the 80s. It must have been kept alive for quite a while if it was installed 40 years ago.

Judy McMullan: It was decommissioned Apr 23, 1992

Brenda Parsons: The 6050 or the Level 66 or the DPS8 — wasn’t there a hardware change in there somewhere before ’92?

Jan Gray: Thanks for explaining the S.C. Johnson connection. I had no idea how the Unix culture came to Waterloo.

Check out Thinkage’s GCOS expl catalog for real down-memory-lane fun: http://www.thinkage.ca/english/gcos/expl/masterindex.html

I was just a young twerp user, but I fondly remember the Telerays and particularly rmcrook/adv/hstar. As well as this dialog (approximate) :-

Sorry, colossal cave is closed now.

> WIZARD

Prove you’re a wizard? What’s the password?

> XXXXX

Foo, you are nothing but a charlatan.c

.r

..a

...s

....h_

Random musings from the desk of Ciaran O’Donnell when he should be working

I would especially like to thank my dear friend Judy McMullan for organizing this wonderful reunion.

I am so glad to have gone to a University that was born the same year as me, that taught you Mathematics, that did not force you to program in Cobol or use an IBM-360, and that paid people like Reinaldo Braga to write a B compiler. It was nice to have L.J. Dickey teach you about APL in a world before Excel and to learn logic and geometry.

It was so nice to go to university, to not have to own a credit card or a car, to be able to wash floors at the co-op residence, and to pay tuition for the price of a 3G iPad today. It was not so bad either not to get arrested for smoking pot or crashing the Honeywell mainframe even though one was quite a nuisance, or to play politics on the Chevron.

It was so neat to be mentored by people like Ernie Chang and Jay Majithia. The University of Waterloo in the 1970s is an unsung place of great programming. I just have to look at what people like Ron Hansen accomplished designing a chess program or what David Conroy has become. As for myself, I have actually learned C++ and Java which proves that you can teach an old dog new tricks.

How things have changed. Back then, we kicked the Marxist-Leninists off the Chevron. Nowadays, communist officials from China can come to America and get a hero’s welcome at a Los Angeles Lakers game. All I will say about my life since 1979, which I have spent in France, is … “I KNOW NOTHING” like Sgt. Schultz from Hogan’s Heroes.

I am especially grateful to Steven C Johnson for having inspired me to get into compilers and to Sunil Saxena for having encouraged me to come to California.

There are a lot of fun people down here from Waterloo including myself, Peter Stevens, Rick Beach, Sanjay Rhadia, David Cheriton, Dave Conroy, Kent Peacock, Sunil Saxena, John Williamson, and a whole bunch of others.

Ciaran (aka cgodonnell)

Sadly, I am not going to make it. It was touch and go right to the end, but I have to go to DC to be a witness in an ITC dispute.

Lynn and I will try and sync with the group online on Saturday.

dgc

Photo Courtesy Jan Gray

Bill Gates, partly at the instigation of Warren Buffett who added his personal fortune to that of Gates, left Microsoft, the company he built, to dedicate his life to innovative solutions to large world issues such as global health and world literacy through the

Started by Paul Brainerd, Seattle-based

Jeffrey Skoll, a Canadian-born billionaire living in Los Angeles and an early employee of

Waterloo’s own Mike Lazaridis aims to transform our understanding of the universe itself by investing hundreds of millions of dollars into

(l->r) Ralph Deiterding, Eric Palmer, Ruth Songhurst, [TSX VP], Randall Howard, Tobi Moriarty, Mike Day, David Rowley

MKS Team (circa 1992) – Old Post Office, Waterloo

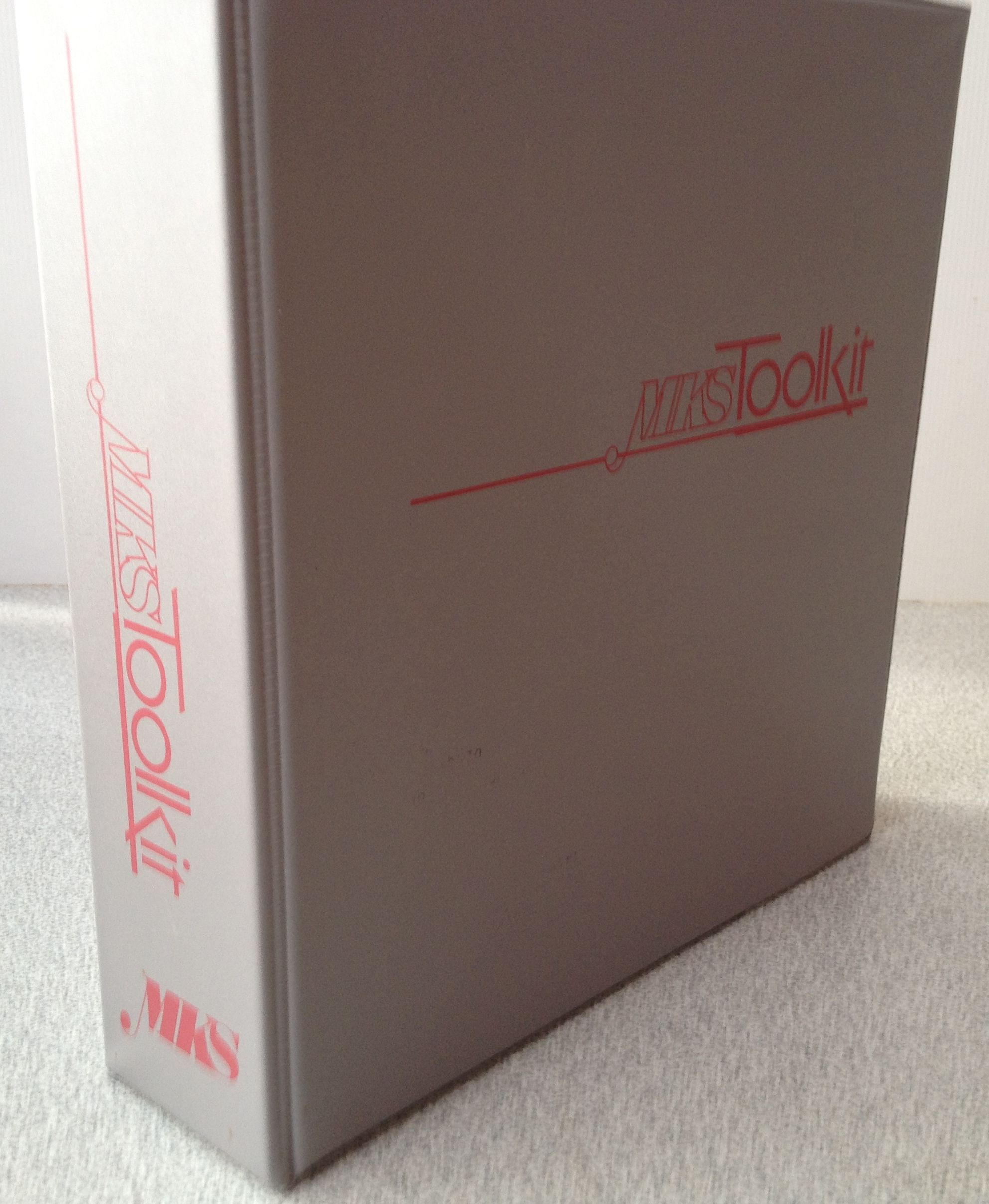

In 1984, I co-founded MKS with Alex White, Trevor Thompson, Steve Izma and later Ruth Songhurst. Although the company was supposed to build incremental desktop publishing tools, our early consulting led us into providing UNIX-like tools for the fledgling IBM PC DOS operating environment (this is a charitable description of the system at the time). This led to MKS Toolkit, InterOpen and other products aimed at taking the UNIX zeitgeist mainstream. With its first commercial release in 1985, this product line eventually spread to millions of users, and even continues today, surprising even me with both its longevity and reach. MKS, having endorsed POSIX and X/Open standards, became an open systems supplier to IBM MVS, HP MPE, Fujitsu Sure Systems, DEC VAX/VMS, Informix and Sun Microsystems.

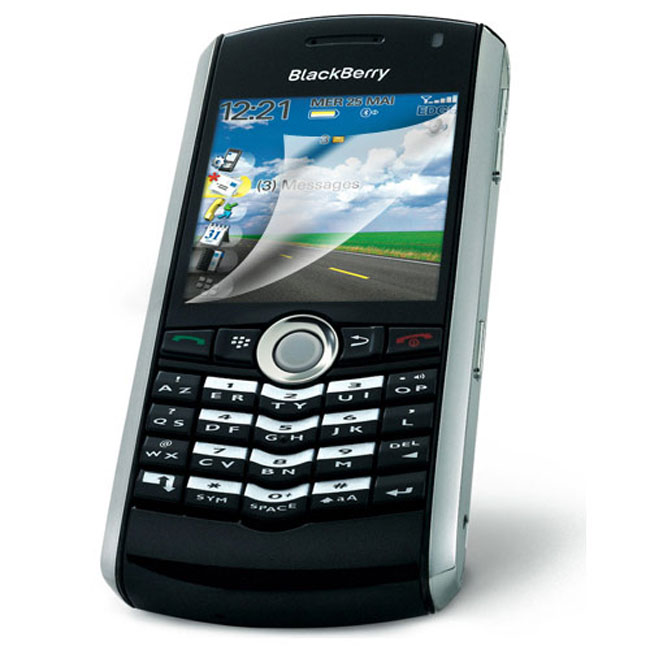

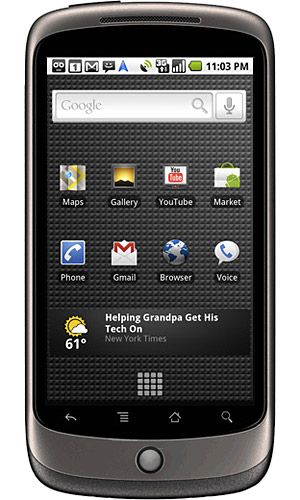

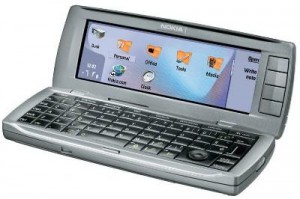

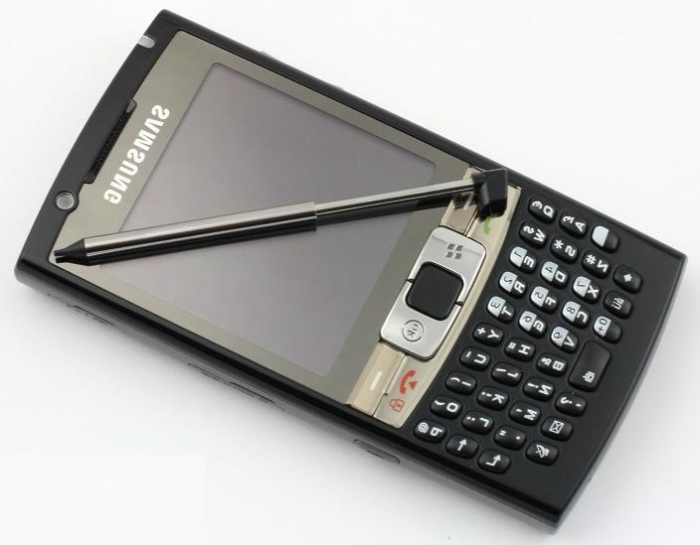

During my later years at MKS, as the CEO, I was mainly business focussed and, hence, I tried to hide my “inner geek”. More recently, coincidentally as geekdom has progressed to a cooler and more important sense of ubiquity, I’ve “outed” my latent geek credentials. Perhaps it was because of this that I rarely thought about UNIX and the influence that the talented Bell Labs team, including Dennis Ritchie, had on my life and career. Now in the second decade of the 21st century, the world of computing has moved on to mobile, cloud, Web 2.0 and Enterprise 2.0. In the 1980s, after repeated missed expectations that this would (at last) be the “Year of UNIX”, we all became resigned to the total dominance of Windows. It was, in my view, a fatally flawed platform with poor architecture, performance and security, yet Windows seemed to meet the needs of the market at the time. After decades of suffering through the “three finger salute” (Ctrl-Alt-Del) and waiting endlessly for that hourglass (now a spinning circle – such is progress), in the irony of ironies UNIX appears on course to win the battle for market dominance. With all its variants (including Linux, BSD and QNX), UNIX now powers most of the important mobile and other platforms such as MacOS, Android, iOS (iPhone, iPad, iPod) and even BlackBerry PlayBook and BB10. Behind the scenes, UNIX largely forms the architecture and infrastructure of the modern web, cloud computing and also all of Google. I’m sure, in his modest and unassuming way, Dennis would be pleased to witness such an outcome to his pioneering work.

My current device usage pattern sees a BlackBerry as my core device for traditional functions such as email, contacts and phone and my iPhone for the newer, media-centric use cases of web browsing, social media, testing and using applications, and so on. Far from being rare, such carrying of two mobile devices seems to be the norm amongst many early adopters. Some even call it their “guilty secret.”

2 Sep 2012

[Book Review]: The Innovator’s Dilemma

The innovator’s dilemma by Clayton M. Christensen

Published by HarperBusiness

This summer I took time to re-read an oft-overlooked volume that I believe to be essential to anyone working in marketing and innovation. In this review, I’ll provide a few examples of why this book needs more attention, particularly here in Canada where we definitely need to up our game in marketing of innovation and technology.

Clayton Christensen, an Associate Professor of Business Administration at Harvard Business School, is a leading academic researcher on innovation. Yet he still manages to provide practical and pragmatic strategies that real companies can use. And, most importantly, his theoretical groundwork is based on extensive, data-intensive research over a long period of time with real companies and markets going through disruptive innovation.

The latter term is often thrown around lightly in technology company circles. A disruptive technology (or innovation) typically has worse product performance in mainstream markets while having key features that interest fringe and emerging markets. By contrast, sustaining technologies provide improved product performance (and often price) in mainstream markets.

The book covers real markets, including the various generations of disk drives, from 14″ drives in the 1970s to today’s 2.5″ (and smaller) drives. Studying hundreds of companies that emerged, thrived and failed over a 25-year period reveals some clear patterns. Further examples, across a broad range of markets, include the microprocessor market, the transition from cable diggers to hydraulic “backhoes”, accounting software and even the transition of industrial motor controllers from mechanical to electronic programmable models.

The key message of the book is that the playbook for normal (“sustaining”) technology innovation must be thrown away for disruptive technologies. Disruptive technologies break traditional rules in many, often counter-intuitive ways:

Entrepreneurial writings, not to mention my own experience, encourage us to celebrate failure. Beyond the power of learning by trial and error, The Innovator’s Dilemma, for the first time, provides an analytical framework as to why such failure is so critical in new markets.

One area where the book could provide more guidance is that of differentiating disruptive from sustaining technologies. Such discrimination is absolutely critical to ensure the right strategic approach to the new technology is adopted. Generally easy with the benefit of hindsight, such determination can be very tricky, and error prone, when first confronted with such new technologies.

This is a book that anyone working with products in fast-moving markets needs to re-read regularly. It surprises me, 15 years after publication, how few product marketers and senior executives appear to have benefited from the deep wisdom Christensen imparts.